Working at the intersection of visual computing and cloud infrastructure, I’ve watched the way we process and evaluate 3D data change quickly. Point clouds, which are collections of spatial coordinates (often with per-point color) that represent 3D objects, now underpin many immersive technologies. The hard part has always been quality assessment, especially when there is no reference data to compare against.

The development I’ve been tracking most closely is the emergence of no-reference quality metrics for point cloud assessment. These models estimate the visual quality of 3D data without a pristine original to compare against, which is a game-changer for cloud-based applications where reference data is often unavailable or impractical to maintain.

The PST-PCQA Framework: A Breakthrough Approach

Recent research has introduced PST-PCQA, a patch-based, no-reference quality assessment framework that evaluates a point cloud by analyzing individual patches and integrating both local and global features. What makes this approach particularly valuable for cloud environments is its lightweight computational footprint, which is essential when processing resources are distributed and must be allocated efficiently.

The framework operates by:

1. Extracting structural and textural features from small patches of the point cloud

2. Combining these features using correlation weights

3. Predicting overall quality scores that closely align with human perception
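To make the three steps above concrete, here is a minimal sketch of the general patch-then-pool idea in plain Python. It is not the PST-PCQA implementation: the feature definitions, the cosine-similarity weighting, the toy scoring formula, and every function name are my own assumptions, chosen only to show the shape of the computation. A real model would learn the features and the regressor from subjectively rated data.

```python
import math

def split_into_patches(points, patch_size):
    """Group (x, y, z, luminance) points into fixed-size patches.

    Sorting by coordinates is a crude stand-in for proper spatial sampling.
    """
    ordered = sorted(points, key=lambda p: (p[0], p[1], p[2]))
    return [ordered[i:i + patch_size] for i in range(0, len(ordered), patch_size)]

def patch_features(patch):
    """Return [structural, textural]: geometric spread and luminance spread."""
    n = len(patch)
    centroid = [sum(p[k] for p in patch) / n for k in range(3)]
    spread = math.sqrt(
        sum(sum((p[k] - centroid[k]) ** 2 for k in range(3)) for p in patch) / n
    )
    lum_mean = sum(p[3] for p in patch) / n
    lum_std = math.sqrt(sum((p[3] - lum_mean) ** 2 for p in patch) / n)
    return [spread, lum_std]

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0

def predict_quality(points, patch_size=64):
    """Pool per-patch scores, weighting each patch by its similarity to the global feature."""
    if not points:
        raise ValueError("point cloud is empty")
    feats = [patch_features(p) for p in split_into_patches(points, patch_size)]
    # Global feature: the mean of the local (per-patch) features.
    global_feat = [sum(f[k] for f in feats) / len(feats) for k in range(2)]
    # Assumed weighting: patches that agree with the global feature count more.
    weights = [max(cosine_similarity(f, global_feat), 0.0) for f in feats]
    # Toy placeholder for a learned regressor: more spread means a lower score in (0, 1].
    local_scores = [1.0 / (1.0 + f[0] + f[1]) for f in feats]
    total = sum(weights)
    if total == 0:
        return sum(local_scores) / len(local_scores)
    return sum(w * s for w, s in zip(weights, local_scores)) / total
```

Calling `predict_quality(points, patch_size=64)` on a list of `(x, y, z, luminance)` tuples returns a single score. Because each patch is featurized independently, that step is the natural one to fan out across workers, with only the small feature vectors sent back for pooling.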

Unlike methods that demand extensive computational resources, this approach is light enough to run across distributed cloud systems without becoming a bottleneck. Its efficiency comes from a small model: fewer parameters to learn means faster processing and lower resource consumption.

Cloud Computing Implications

The cloud computing ecosystem stands to benefit tremendously from these advances. When we can accurately assess point cloud quality without references, we enable:

- Real-time quality monitoring of streaming 3D content

- Adaptive compression algorithms that respond to available bandwidth

- More efficient resource allocation across distributed rendering nodes

- Enhanced user experiences in cloud-based VR/AR applications

For cloud service providers, these capabilities translate to cost savings and improved user satisfaction. The lightweight nature of these algorithms means they can be deployed even on edge devices with limited computational capacity, expanding the reach of cloud-based 3D applications.

Distortion Management in Cloud Environments

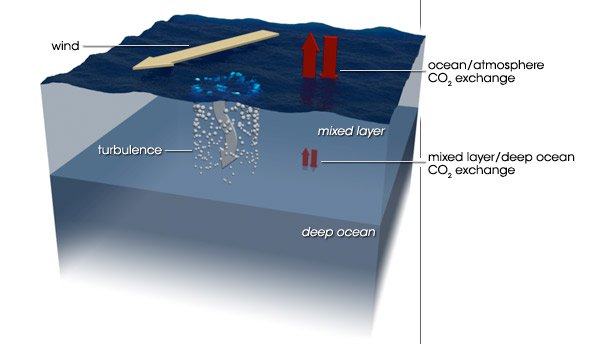

Point clouds traversing cloud infrastructure face multiple potential distortions. From acquisition artifacts to compression losses during transmission to rendering inconsistencies at display time, the quality degradation points are numerous. Traditional cloud architectures weren’t designed with these specific challenges in mind.

What’s particularly promising about the PST-PCQA approach is its ability to detect and quantify various types of distortions, including geometry inconsistencies and color artifacts. This comprehensive assessment capability allows cloud systems to identify where in the pipeline quality degradation is occurring and implement targeted mitigations.
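One way to picture the pipeline localization described above: score the point cloud with a no-reference metric after each stage, then look for the largest drop between consecutive stages. The sketch below assumes those per-stage scores already exist. The stage names, the score scale, and the function name are hypothetical and are not taken from the PST-PCQA work.

```python
def largest_quality_drop(stage_scores):
    """Return (stage_name, drop) for the stage with the largest score decrease.

    stage_scores is an ordered list of (stage_name, quality_score) pairs,
    where each score was measured at the output of that stage.
    """
    if len(stage_scores) < 2:
        raise ValueError("need at least two stages to compare")
    drops = []
    for (_, prev_score), (name, score) in zip(stage_scores, stage_scores[1:]):
        drops.append((name, prev_score - score))
    # The stage whose output lost the most quality relative to its input.
    return max(drops, key=lambda d: d[1])

# Illustrative numbers only, not measurements.
stages = [
    ("acquisition", 4.5),
    ("compression", 3.1),
    ("transmission", 3.0),
    ("rendering", 2.8),
]
stage, drop = largest_quality_drop(stages)
```

With these made-up numbers, the compression stage accounts for most of the loss, so that is where a targeted mitigation would go first.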

The Intersection of Human Perception and Machine Assessment

Perhaps the most intriguing aspect of this research is how it bridges human visual perception with machine learning models. Cloud computing has traditionally focused on technical metrics like bandwidth utilization and computational efficiency. However, the ultimate measure of success is user satisfaction.

The correlation between PST-PCQA predictions and Mean Opinion Scores from human evaluators suggests we’re getting closer to algorithms that truly understand what matters to human observers. For cloud providers, this means being able to optimize for what actually impacts the user experience rather than technical metrics that may or may not correlate with satisfaction.
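Agreement between a metric and Mean Opinion Scores is usually summarized with two numbers: the Pearson linear correlation (PLCC) for prediction accuracy and the Spearman rank-order correlation (SROCC) for monotonicity. I am assuming these are the relevant measures here, since the discussion above does not name them. The sketch below computes both from scratch so the arithmetic is visible; the function names are my own.

```python
import math

def pearson(x, y):
    """Pearson linear correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (sx * sy)

def ranks(values):
    """1-based ranks, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result

def spearman(x, y):
    """Spearman rank-order correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))
```

A prediction that orders the stimuli correctly but on a nonlinear scale will score a perfect SROCC and a lower PLCC, which is why the two are normally reported together.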

Future Directions

Looking ahead, I see tremendous potential for these quality assessment frameworks to evolve into predictive models that can anticipate and prevent quality issues before they manifest. Imagine cloud systems that can dynamically adjust rendering parameters, compression ratios, and transmission protocols based on predicted quality impacts rather than reactive measurements.
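As a rough illustration of quality-driven adjustment, the sketch below picks a compression setting from a table of candidates, each carrying a predicted quality score. Everything here is hypothetical: the candidate fields, the settings, and the selection policy are assumptions made for illustration, not an existing system or API.

```python
def choose_setting(candidates, bandwidth_mbps, target_quality):
    """Pick the cheapest setting that fits the bandwidth and meets the quality target.

    Each candidate is a dict with 'name', 'bitrate_mbps', and 'predicted_quality'.
    """
    if not candidates:
        raise ValueError("no candidate settings supplied")
    feasible = [c for c in candidates if c["bitrate_mbps"] <= bandwidth_mbps]
    if not feasible:
        # Nothing fits: fall back to the lowest-bitrate option.
        return min(candidates, key=lambda c: c["bitrate_mbps"])
    good_enough = [c for c in feasible if c["predicted_quality"] >= target_quality]
    if good_enough:
        # Meet the target with as little bandwidth as possible.
        return min(good_enough, key=lambda c: c["bitrate_mbps"])
    # Target is unreachable within the budget: take the best quality that fits.
    return max(feasible, key=lambda c: c["predicted_quality"])

# Illustrative values only.
candidates = [
    {"name": "low", "bitrate_mbps": 5, "predicted_quality": 2.5},
    {"name": "medium", "bitrate_mbps": 20, "predicted_quality": 3.6},
    {"name": "high", "bitrate_mbps": 60, "predicted_quality": 4.4},
]
choice = choose_setting(candidates, bandwidth_mbps=30, target_quality=3.5)
```

The policy spends only as much bandwidth as the quality target requires, which is one simple reading of improving the quality-to-resource ratio.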

The integration of these quality metrics with other cloud optimization algorithms could create self-tuning systems that continually improve the quality-to-resource ratio. As computational capabilities expand and algorithms become more sophisticated, we may reach a point where point cloud quality in cloud environments consistently matches or exceeds locally rendered alternatives.

For those of us working at this fascinating intersection of visual geometry and cloud computing, these developments represent not just technical advances but stepping stones toward truly immersive distributed computing experiences. The future of visual cloud computing isn’t just about more pixels or points—it’s about ensuring every point contributes meaningfully to the user experience.