The pursuit of machines that perceive and understand the world as humans do has been a defining quest in artificial intelligence research. Today, we stand at an inflection point where several technological streams are converging to create systems with markedly stronger spatial reasoning abilities. The recent developments in geometrically aware AI models like Aether represent not merely incremental improvements but a fundamental shift in how machines interpret and interact with physical reality.

When we examine the historical trajectory of visual AI systems, we can identify distinct evolutionary phases. Early computer vision focused primarily on classification and recognition tasks—identifying objects within static images. This gradually expanded to tracking objects across video frames, but these systems lacked true understanding of three-dimensional space. Recent breakthroughs have pushed beyond these limitations, integrating geometric reasoning with generative capabilities.

The Three Pillars of Visual Intelligence

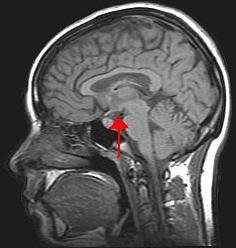

The architectural philosophy underlying these advances rests on three interconnected capabilities that mirror human cognitive processes. First is perception—specifically 4D reconstruction that captures both spatial arrangements and temporal dynamics. This gives machines the ability to build comprehensive mental models of their environments, much as humans do intuitively.

The second pillar is prediction, allowing systems to forecast environmental changes under different conditions or actions. This predictive capability has roots in early reinforcement learning models but has now evolved to incorporate sophisticated understanding of physics and causality.

Finally, planning capabilities enable these systems to determine optimal action sequences to achieve specified goals. This represents perhaps the most sophisticated level of intelligence, requiring both perceptual accuracy and predictive power to function effectively.
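
As a toy illustration of how the three pillars compose, the sketch below models a one-dimensional world in Python. Everything in it is hypothetical: the function names `perceive`, `predict`, and `plan`, the averaging estimator, the additive dynamics, and the brute-force search are stand-ins chosen for brevity, not the method used by Aether or any other specific system.

```python
from itertools import product
from statistics import fmean


def perceive(observations: list[float]) -> float:
    """Perception (toy): estimate the current position by averaging noisy readings."""
    return fmean(observations)


def predict(state: float, action: float) -> float:
    """Prediction (toy): assumed dynamics where an action shifts the position directly."""
    return state + action


def plan(state: float, goal: float,
         actions: tuple[float, ...] = (-1.0, 0.0, 1.0),
         horizon: int = 3) -> tuple[float, ...]:
    """Planning (toy): try every action sequence of length `horizon` and keep
    the one whose predicted final position lands closest to the goal."""

    def final_error(sequence: tuple[float, ...]) -> float:
        s = state
        for a in sequence:
            s = predict(s, a)  # roll the predictive model forward
        return abs(s - goal)

    return min(product(actions, repeat=horizon), key=final_error)


# Usage: perceive, then plan using the predictive model.
state = perceive([0.9, 1.1, 1.0])
best_sequence = plan(state, goal=3.0)
```

The point of the sketch is the dependency structure: `plan` can only be as good as `predict`, and `predict` can only be as good as the state that `perceive` hands it. Exhaustive search is used purely because the action space here is tiny.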

Synthetic-to-Real: A Paradigm Shift

Perhaps most remarkable in recent developments is the ability of systems trained entirely on synthetic data to generalize to real-world scenarios. This represents a profound shift in the machine learning paradigm. Historically, the gap between synthetic training environments and real-world deployment has been a significant barrier to practical applications. Systems like Aether demonstrate that with proper geometric grounding, this synthetic-to-real transfer becomes not just possible but remarkably effective.

The implications are far-reaching. Training on synthetic data addresses many of the challenges that have hampered progress in applied AI: the scarcity of comprehensive real-world datasets, privacy concerns associated with data collection, and the prohibitive cost of producing adequately labeled training data. By leveraging synthetic environments where perfect ground truth is available, researchers can develop systems with robust foundations in geometric understanding that carry over to real-world conditions.

Unifying Reconstruction, Prediction, and Planning

What distinguishes the current wave of innovation from previous approaches is the unification of previously separate technological domains. Rather than treating reconstruction, prediction, and planning as distinct problems requiring specialized solutions, contemporary frameworks integrate these capabilities within unified models.

This integration creates virtuous cycles of improvement. Better geometric reconstruction leads to more accurate predictions; more accurate predictions enable more effective planning; and the feedback from planning outcomes can refine both reconstruction and prediction models. The result is systems that exhibit emergent capabilities beyond what any single component could achieve in isolation.

The technical approach underpinning these advances combines pre-trained video generation models with post-training on synthetic 4D data. This process transforms basic generative models into multi-functional world models capable of understanding depth, camera positioning, and physical interactions. The use of camera trajectory as an action representation is particularly elegant, because a single representation then serves both navigation and manipulation tasks.
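
To make the idea of a camera trajectory as an action representation more concrete, here is a minimal sketch in pure Python. It assumes a trajectory is a list of 4x4 camera-to-world pose matrices and treats the relative motion between consecutive frames as the "action". The helper names (`pose`, `invert_rigid`, `trajectory_to_actions`, `rollout`) and the restriction to rotation about the vertical axis are illustrative assumptions, not details taken from Aether.

```python
import math

Matrix = list[list[float]]


def pose(yaw: float, tx: float, ty: float, tz: float) -> Matrix:
    """Hypothetical 4x4 camera-to-world pose: rotation about the y axis plus translation."""
    c, s = math.cos(yaw), math.sin(yaw)
    return [
        [c, 0.0, s, tx],
        [0.0, 1.0, 0.0, ty],
        [-s, 0.0, c, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain 4x4 matrix product."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def invert_rigid(m: Matrix) -> Matrix:
    """Invert a rigid transform using [R | t]^-1 = [R^T | -R^T t]."""
    rt = [[m[j][i] for j in range(3)] for i in range(3)]
    t = [m[i][3] for i in range(3)]
    new_t = [-sum(rt[i][k] * t[k] for k in range(3)) for i in range(3)]
    return [rt[i] + [new_t[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def trajectory_to_actions(poses: list[Matrix]) -> list[Matrix]:
    """Each action is the motion from one frame to the next,
    expressed in the earlier camera's own coordinate frame."""
    return [matmul(invert_rigid(prev), cur) for prev, cur in zip(poses, poses[1:])]


def rollout(start: Matrix, actions: list[Matrix]) -> list[Matrix]:
    """Recover absolute poses by composing the relative actions from a start pose."""
    poses = [start]
    for action in actions:
        poses.append(matmul(poses[-1], action))
    return poses


trajectory = [pose(0.0, 0.0, 0.0, 0.0), pose(0.3, 1.0, 0.0, 0.5), pose(0.6, 2.0, 0.1, 1.5)]
actions = trajectory_to_actions(trajectory)
rebuilt = rollout(trajectory[0], actions)
```

Because each action is expressed relative to the current camera, the same encoding describes a robot base moving through a room or a camera following a moving gripper. That shared encoding is one plausible reading of why the representation suits both task types.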

Applications and Future Directions

The potential applications of geometrically aware AI systems span numerous domains. In autonomous vehicles, these technologies enable more sophisticated environmental modeling and prediction of traffic dynamics. In robotics, they allow for more natural manipulation of objects in unstructured environments. In augmented reality, they facilitate seamless integration of virtual elements with physical spaces.

Beyond these immediate applications, the longer-term implications may be even more profound. As these systems continue to evolve, they could fundamentally transform human-computer interaction, enabling more intuitive interfaces that understand not just what users are doing but what they intend to do.

The path forward is not without challenges. Current models still face limitations in processing complex, dynamic scenes with multiple agents. Computational requirements remain substantial, though specialized hardware and optimized algorithms continue to improve efficiency. Perhaps most importantly, these systems will need to develop a more sophisticated understanding of causal relationships to fully realize their potential.

Nevertheless, the convergence of geometric awareness with generative modeling represents a watershed moment in AI development. It marks a transition from systems that can recognize and classify to systems that can understand, predict, and plan within physical environments. This evolution mirrors the way humans develop cognitive capabilities through interaction with the world: pattern recognition gradually gives way to deeper spatial understanding and eventually to sophisticated planning abilities.

The journey from early computer vision systems to today’s geometrically aware world models reflects a broader trend in artificial intelligence: the movement from narrow, task-specific solutions toward more general, unified approaches that capture the richness and complexity of human cognition. As these technologies continue to mature, they promise to bring us closer to AI systems that truly understand the world as we do.